Lighthouse Labs

W10D5 - LLM Deployment & LLM Ethics

Instructor: Socorro E. Dominguez-Vidana

Overview¶

- [] Whose Role is it?

- [] LLM Deployment

- [] Deployment Patterns

- [] Demo

- [] LLM Ethics

- [] Ethical Considerations

- [] Potential Unethical Use Cases

- [] Model Cards

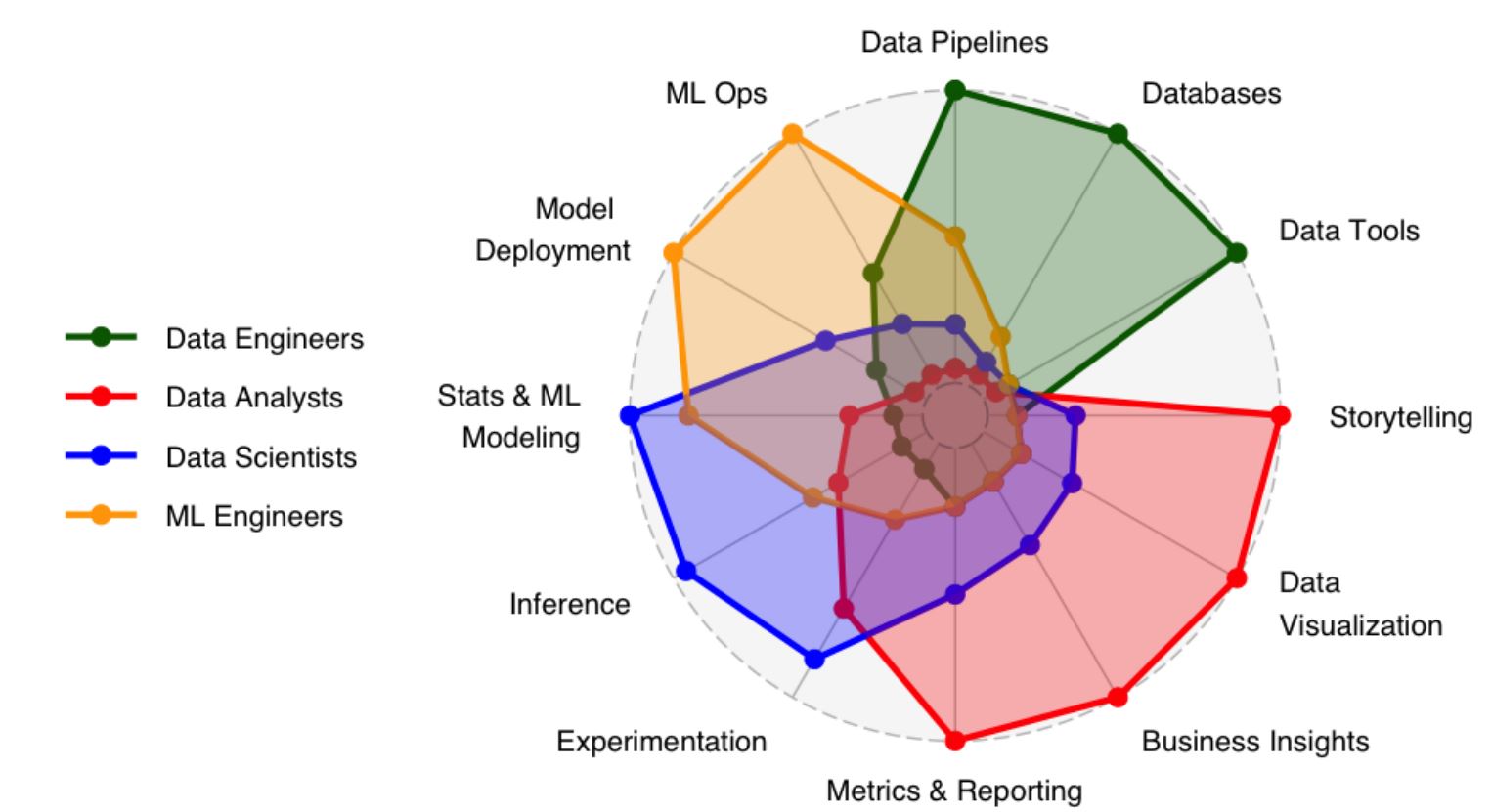

Deployment is a TEAM effort¶

- Besides technical roles, you also need:

- Ethics / Compliance Officers

- Legal departments

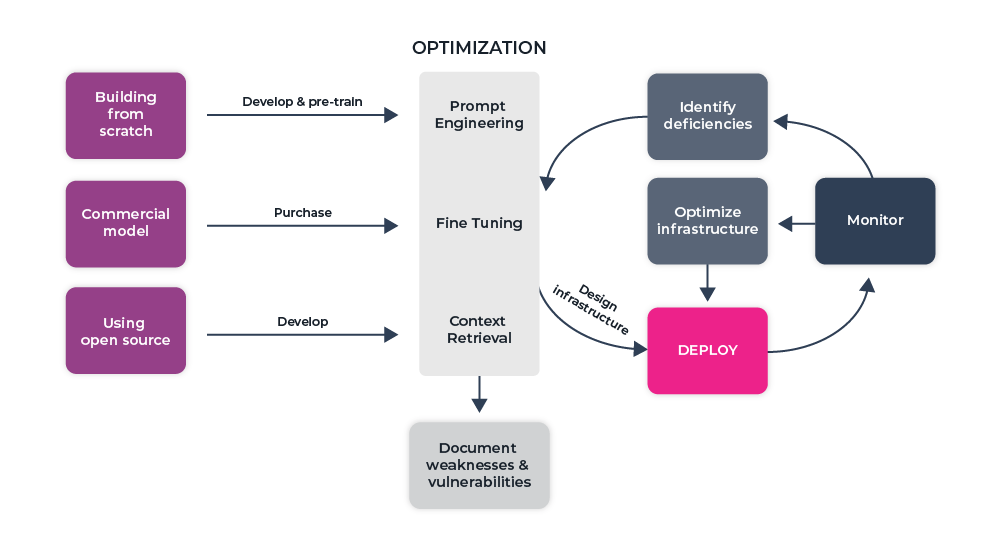

Deployment Patterns¶

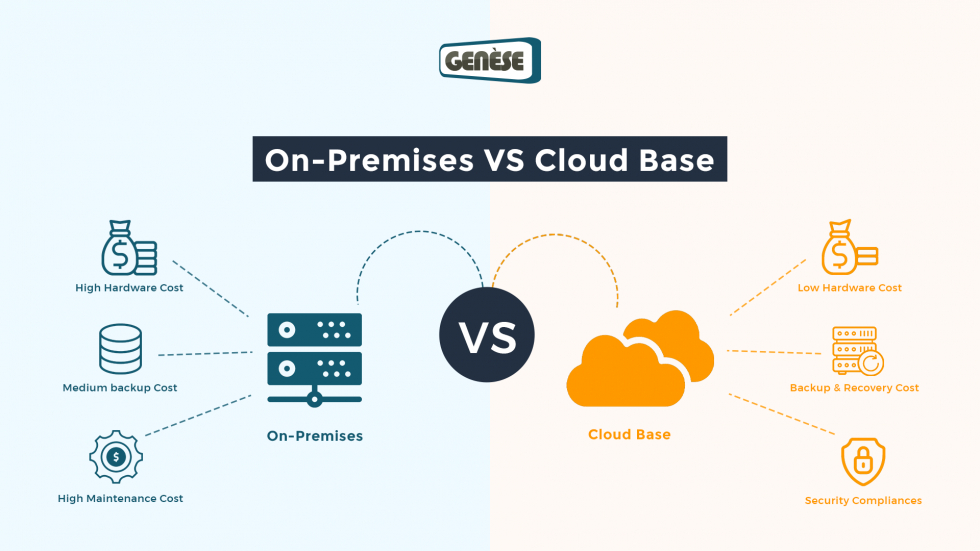

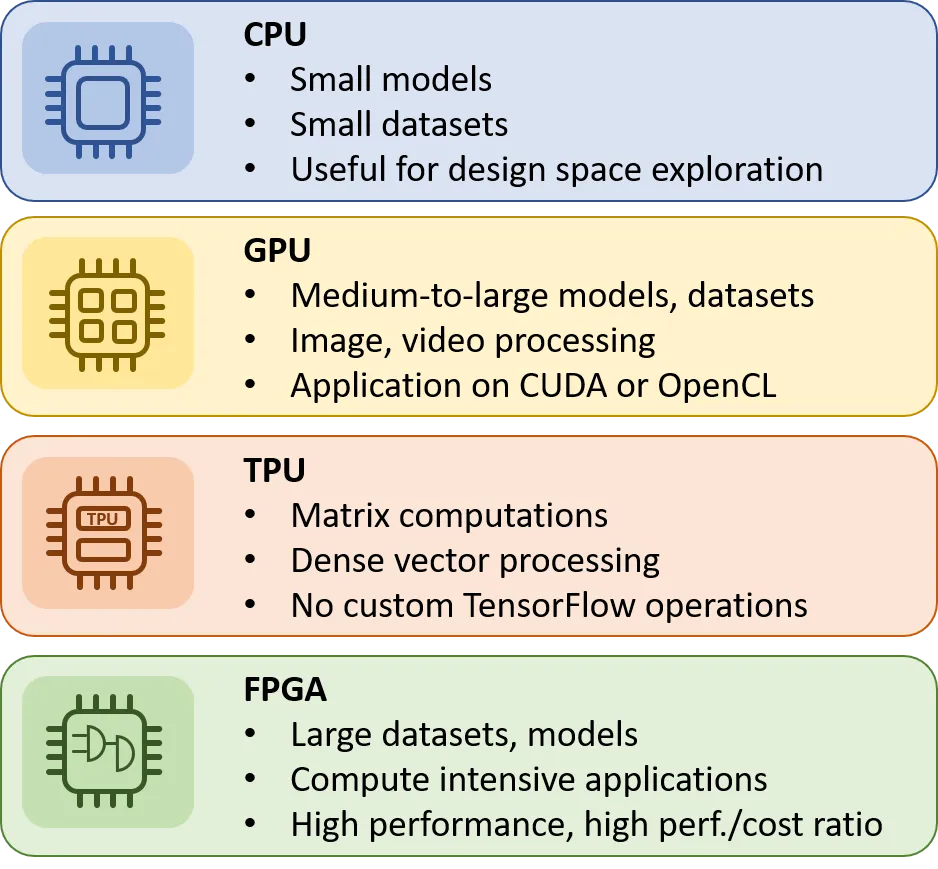

Deployment Options¶

The right option depends on the use case:

- Real time

- Serverless

- Batch transforms

- Asynchronous
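As a rough illustration of how these options differ from the caller's point of view, the sketch below uses a hypothetical stand-in function, `fake_generate`, instead of a real deployed model. It contrasts a real-time call (one request, the caller waits), a batch transform (a whole dataset processed offline), and an asynchronous pattern (submit now, collect later). Serverless is left out because, from the caller's side, it generally looks like the real-time case; the difference lies in how capacity is provisioned.

```python
import asyncio


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a deployed LLM; returns a canned reply."""
    return f"echo: {prompt}"


def real_time(prompt: str) -> str:
    # Real time: one request in, one response out, and the caller waits for it.
    return fake_generate(prompt)


def batch_transform(prompts: list[str]) -> list[str]:
    # Batch: process a whole dataset offline; per-item latency matters less.
    return [fake_generate(p) for p in prompts]


async def asynchronous(prompt: str) -> str:
    # Asynchronous: the request is queued and the result is picked up later.
    # The short sleep simulates a long-running job.
    await asyncio.sleep(0.01)
    return fake_generate(prompt)


async def submit_many(prompts: list[str]) -> list[str]:
    # Submit every request up front, then gather results as they finish.
    tasks = [asyncio.create_task(asynchronous(p)) for p in prompts]
    return await asyncio.gather(*tasks)


if __name__ == "__main__":
    print(real_time("hello"))
    print(batch_transform(["a", "b"]))
    print(asyncio.run(submit_many(["x", "y"])))
```

Which pattern fits usually comes down to latency needs, payload size, and how steady the traffic is.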

Demo - Deployment¶

References:¶

Original Slides authored by: Siphu Langeni

Ethics¶

Responsible AI¶

“Responsible AI (RAI) ensures that the development and deployment of AI systems aligns with ethical principles and values, including transparency and accountability. RAI principles and best practices help reduce the potential negative impacts of AI caused by machine bias.”

H2O.ai. (n.d.). Responsible AI overview. H2O.ai. Retrieved June 17, 2024

Pillars of Responsible AI¶

1. Bias and Fairness¶

- AI systems can inherit biases from training data or algorithms, leading to unfair outcomes (e.g., gender, racial, or cultural biases).

- Actions:

- Evaluate datasets for bias and ensure diversity.

- Use fairness-aware algorithms and tools to mitigate bias.

- Regularly audit AI systems to identify and address biases.

- Remove features the model does not need, especially ones that encode or act as proxies for protected attributes.
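One simple way to start auditing for bias is to compare how often each group receives a positive decision. The sketch below computes per-group selection rates and their gap (often called the demographic parity difference). The data is made up, the function names are our own, and this is only one of several fairness metrics, so treat it as a starting point rather than a complete audit.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive predictions (1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for g, p in zip(groups, predictions):
        totals[g] += 1
        positives[g] += p
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rate (0 means parity)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Made-up toy data: a group label and the model's yes/no decision per applicant.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)
print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```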

2. Privacy and Data Protection¶

- AI processes large amounts of personal and sensitive data, raising privacy concerns.

- Actions:

- Follow data protection laws (depending on your region).

- Anonymize and encrypt data to protect user privacy.

- Clearly communicate how user data will be collected, stored, and used.
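A minimal sketch of two common protective steps, using only the Python standard library: replacing a user identifier with a keyed hash, and masking e-mail addresses in free text before it is logged or sent to a model. The key, the regular expression, and the record are illustrative assumptions. Note that keyed hashing is pseudonymization rather than full anonymization, and the regex will not catch every form of personal data.

```python
import hashlib
import hmac
import re

# Hypothetical secret; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-me"

# Simple e-mail pattern for illustration; real PII detection needs more than this.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(user_id: str) -> str:
    """Replace an identifier with a keyed hash: stable for the same input,
    hard to link back to the person without the key."""
    digest = hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def redact_emails(text: str) -> str:
    """Mask e-mail addresses before text is stored or passed on."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


record = {"user_id": "alice-42", "message": "Reach me at alice@example.com please."}
safe_record = {
    "user_id": pseudonymize(record["user_id"]),
    "message": redact_emails(record["message"]),
}
print(safe_record["message"])  # Reach me at [EMAIL] please.
```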

3. Transparency and Explainability¶

- Many AI systems (e.g., deep learning models) operate as "black boxes," making their decisions hard to interpret.

- Actions:

- Use explainable AI (XAI) techniques to ensure decisions are understandable.

- Provide documentation on how AI systems make decisions.

- Be transparent with stakeholders about AI capabilities and limitations.
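To give a feel for what an explainability technique does, the sketch below applies occlusion (leave-one-out) attribution: remove each input token in turn and measure how much the model's score changes. The "model" here is a hypothetical toy scorer with hand-picked weights, standing in for a real black box; production XAI libraries use more careful variants of the same idea.

```python
def toy_sentiment_score(tokens):
    """Hypothetical black-box model: sums hand-picked word weights."""
    weights = {"great": 2.0, "good": 1.0, "bad": -1.5, "not": -0.5}
    return sum(weights.get(t, 0.0) for t in tokens)


def occlusion_attributions(model, tokens):
    """Score change when each token is removed; a larger change means more influence."""
    baseline = model(tokens)
    attributions = []
    for i, token in enumerate(tokens):
        without = tokens[:i] + tokens[i + 1:]
        attributions.append((token, baseline - model(without)))
    return attributions


tokens = "the food was great but service was bad".split()
for token, contribution in occlusion_attributions(toy_sentiment_score, tokens):
    print(f"{token:>8}: {contribution:+.1f}")
```

The technique only needs to call the model, not inspect it, which is why it also works when the internals are not available.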

4. Accountability and Responsibility¶

- It is crucial to assign accountability for the outcomes of AI systems, especially when harm occurs.

- Actions:

- Define roles and responsibilities for AI developers, deployers, and users.

- Establish processes to address issues caused by AI (e.g., incorrect decisions or harm).

- Regularly monitor AI performance to ensure compliance with ethical standards.
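Accountability is easier when every output can be traced back to a model version and a responsible owner. The following is a minimal sketch of an audit log entry; the field names, the model version string, and the owner name are assumptions made for this example, not a standard schema.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_version: str, prompt: str, output: str, owner: str) -> dict:
    """Build one log entry tying an output to a model version and an accountable owner."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "owner": owner,
        # Store a hash rather than the raw prompt to limit exposure of personal data.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output": output,
    }


# Hypothetical values, for illustration only.
entry = audit_record("demo-model-v1", "Is this loan eligible?", "needs review", "risk-team")
print(json.dumps(entry, indent=2))
```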

5. Safety and Security¶

- AI systems must be designed to ensure the safety of users and prevent misuse.

- Actions:

- Protect AI models from adversarial attacks or hacking.

- Ensure AI systems perform reliably and predictably, especially in critical applications like healthcare or autonomous vehicles.

- Test AI systems rigorously for edge cases and failures.
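Edge-case testing can start with something as basic as checking what the service does with empty, malformed, or oversized inputs. The sketch below shows a hypothetical `validate_prompt` guard and a handful of edge cases it should reject; the length limit is a made-up example value.

```python
def validate_prompt(prompt, max_chars=2000):
    """Return a cleaned prompt, or raise ValueError for inputs we refuse to serve."""
    if not isinstance(prompt, str):
        raise ValueError("prompt must be a string")
    cleaned = prompt.strip()
    if not cleaned:
        raise ValueError("prompt is empty")
    if len(cleaned) > max_chars:
        raise ValueError("prompt is too long")
    return cleaned


# Edge cases worth checking before launch: empty, whitespace-only,
# wrong type, and over the (illustrative) length limit.
edge_cases = ["", "   ", None, "x" * 2001]
rejected = 0
for case in edge_cases:
    try:
        validate_prompt(case)
    except ValueError:
        rejected += 1
print(rejected)  # 4
```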

6. Human Oversight¶

- AI systems should complement human decision-making rather than fully replacing it, especially in sensitive areas like law enforcement or healthcare.

- Actions:

- Include mechanisms for human review of AI decisions.

- Ensure humans remain in control of AI systems.

- Promote AI as a decision-support tool rather than an autonomous agent.
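One common way to build in human review is confidence-based routing: the system acts on its own only when the model is confident, and queues everything else for a person. The sketch below assumes the model returns a label with a confidence score; the 0.9 threshold and the sample outputs are illustrative, and in sensitive domains every decision may need review regardless of confidence.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def route(label: str, confidence: float, threshold: float = 0.9) -> Decision:
    """Auto-accept only confident predictions; send the rest to a person."""
    return Decision(label, confidence, needs_human_review=confidence < threshold)


# Made-up model outputs: (predicted label, confidence).
outputs = [("approve", 0.97), ("deny", 0.62), ("approve", 0.90)]
decisions = [route(label, conf) for label, conf in outputs]
review_queue = [d for d in decisions if d.needs_human_review]
print(len(review_queue))  # 1
```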

7. Beneficence and Avoiding Harm¶

- AI should be used to create positive outcomes and avoid causing harm.

- Actions:

- Evaluate potential risks and harms before deploying AI systems.

- Consider the societal impact of AI on individuals and communities.

- Avoid using AI for unethical purposes (e.g., surveillance without consent, weaponization).

8. Inclusivity and Accessibility¶

- AI should be designed to be accessible to diverse populations and not exclude certain groups.

- Actions:

- Ensure AI systems are inclusive in their design and testing.

- Address disparities in access to AI technologies.

- Promote AI tools that improve accessibility for people with disabilities.

9. Environmental Impact¶

- AI development and training (e.g., large-scale models) consume significant energy resources.

- Actions:

- Optimize AI models to reduce energy consumption.

- Prioritize sustainability in AI infrastructure.

- Consider the environmental costs of large-scale data processing.
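A back-of-the-envelope estimate can make the energy cost tangible: energy is roughly the number of accelerators times their power draw times the run time, scaled by the data centre's overhead factor (PUE), and emissions are that energy times the carbon intensity of the local grid. Every number in the sketch below is an illustrative placeholder, not a measurement of any real training run.

```python
def training_energy_kwh(gpu_count, gpu_power_watts, hours, pue=1.0):
    """Energy = GPUs x power per GPU x time x data-centre overhead (PUE), in kWh."""
    return gpu_count * gpu_power_watts * hours * pue / 1000


def emissions_kg_co2e(energy_kwh, grid_kg_co2e_per_kwh):
    """Emissions = energy x carbon intensity of the electricity grid."""
    return energy_kwh * grid_kg_co2e_per_kwh


# All inputs below are illustrative placeholders.
energy = training_energy_kwh(gpu_count=8, gpu_power_watts=400, hours=100, pue=1.5)
print(energy)  # 480.0
print(emissions_kg_co2e(energy, grid_kg_co2e_per_kwh=0.5))  # 240.0
```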

10. Misuse and Malicious Applications¶

- AI can be misused for disinformation, deepfakes, fraud, or surveillance.

- Actions:

- Develop safeguards against misuse.

- Promote policies and guidelines that discourage malicious AI applications.

- Educate users and stakeholders about ethical AI usage.

Some unfortunate incidents:¶

Amazon’s AI Recruitment Tool (2018)¶

Amazon built an experimental AI recruitment tool to automate resume screening. In 2018 it was reported that the company had abandoned the project after the system was found to exhibit gender bias. The algorithm penalized resumes containing the word “women’s” (as in “women’s chess club captain”) and downgraded graduates of all-women’s colleges.

This happened because the AI model was trained on historical hiring data, which reflected a male-dominated hiring trend over a decade. As a result, the AI learned to favor resumes that matched these biased patterns, perpetuating gender discrimination.

Tom Cruise Deepfake Videos (2021)¶

In 2021, videos of a deepfake Tom Cruise went viral on TikTok, created by a visual effects expert. The videos looked shockingly realistic, showing “Tom Cruise” performing magic tricks, golfing, and speaking casually. While the videos were entertaining, they highlighted how advanced deepfake technology can be misused to impersonate public figures, spread misinformation, or harm reputations.

Cambridge Analytica and Facebook Ads (2016)¶

During the 2016 U.S. presidential election, Cambridge Analytica used data from Facebook to create targeted propaganda ads. These ads aimed to influence voter behavior through tailored political messaging and emotional appeals. The misuse of personal data and AI algorithms amplified political polarization and misinformation, showcasing AI's role in modern propaganda.

AI-Generated Content Misused by Students¶

With the rise of tools like ChatGPT, some students have submitted AI-generated essays or assignments as their own work. Such submissions are generally treated as academic misconduct because the work presented is not the student's own thinking and effort.

Model Cards¶

Model cards document key aspects of machine learning models.

“We propose model cards as a step towards the responsible democratization of machine learning and related artificial intelligence technology, increasing transparency into how well artificial intelligence technology works.”
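To show what a model card can look like in practice, here is a minimal sketch that stores a few typical sections in a dataclass and renders them as Markdown. The field names are a simplification chosen for this example, and the model and all its values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Minimal, illustrative model card; field names are a simplification."""
    name: str
    intended_use: str
    training_data: str
    metrics: dict = field(default_factory=dict)
    ethical_considerations: list = field(default_factory=list)
    caveats: list = field(default_factory=list)

    def to_markdown(self) -> str:
        lines = [
            f"# Model card: {self.name}",
            "",
            "## Intended use",
            self.intended_use,
            "",
            "## Training data",
            self.training_data,
            "",
            "## Metrics",
        ]
        lines += [f"- {k}: {v}" for k, v in self.metrics.items()]
        lines += ["", "## Ethical considerations"]
        lines += [f"- {item}" for item in self.ethical_considerations]
        lines += ["", "## Caveats"]
        lines += [f"- {item}" for item in self.caveats]
        return "\n".join(lines)


# Hypothetical model and values, for illustration only.
card = ModelCard(
    name="support-ticket-classifier",
    intended_use="Routing internal support tickets; not for HR decisions.",
    training_data="Anonymized tickets from an internal help desk.",
    metrics={"accuracy": 0.91},
    ethical_considerations=["Performance not yet evaluated per language."],
    caveats=["Retrain when ticket categories change."],
)
print(card.to_markdown())
```

Keeping the card in code next to the model makes it easier to update the documentation whenever the model is retrained.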

Llama 2 by Meta¶

- Family of open source LLMs with 7B, 13B, and 70B parameters, released by Meta in July 2023

- Free for research and commercial use

- Base model pre-trained on publicly available online data sources

- Chat model is fine-tuned on publicly available instruction datasets and more than 1 million human annotations

Conclusion¶

"To whom much is given, much is expected"

- Deploying LLMs at scale can be challenging yet very rewarding!

- Ethical and responsible AI spans the whole pipeline, from data to deployment.

- Make a habit of including good documentation of your model.